Gaze-independent c-VEP BCI

Context

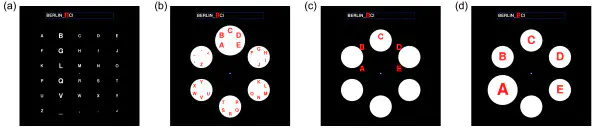

Typically, a brain-computer interface (BCI) requires users to direct their gaze towards a target stimulus, such as a symbol on a screen in a standard matrix speller. This gaze dependence becomes increasingly problematic for certain patient groups, notably individuals with amyotrophic lateral sclerosis (ALS), whose control over eye movements can deteriorate as the disease progresses. This research focuses on the development of a gaze-independent BCI that uses covert spatial attention (attending to a location without looking at it) in combination with novel machine learning techniques.

While previous studies have explored gaze-independent BCIs based on, for instance, the P300 response or the steady-state visual evoked potential (SSVEP), this project investigates the largely unexplored use of code-modulated visual evoked potentials (c-VEP) in a covert paradigm, a considerably faster alternative. The objective is to remove the reliance on voluntary muscle control, including eye movements, entirely, and ultimately to create a fast, reliable, and fully brain-based assistive device. Such a device could substantially improve assistive technology for individuals who cannot use conventional gaze-dependent BCIs.

Image credit: Treder, M. S., & Blankertz, B. (2010). (C)overt attention and visual speller design in an ERP-based brain-computer interface. Behavioral and Brain Functions, 6(1), 1-13.

Research question

This project aims to assess whether the c-VEP, as measured with EEG, can serve as a reliable control signal for a gaze-independent BCI, that is, whether it can be decoded with an accuracy reliably above chance level. The investigation further considers how covert attention affects the spatial distribution of the c-VEP response over the scalp, anticipating changes such as lateralization. Such attention-dependent changes can be exploited by machine learning methods to improve the decoding performance of the BCI.
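
As an illustration of what decoding the c-VEP above chance could look like, the following is a minimal sketch of one common approach, template matching: the training epochs of each class are averaged into a template, and a new epoch is assigned to the class whose template correlates best with it. It assumes a single (e.g. spatially filtered) EEG channel and that the left and right stimuli each flicker with their own code; the function names, codes, and toy data are hypothetical and not taken from the pilot study. Decoders in the c-VEP literature typically add spatial filtering, e.g. with canonical correlation analysis (CCA).

```python
import math
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0.0 or sy == 0.0:
        return 0.0
    return cov / (sx * sy)


def build_templates(epochs: List[List[float]], labels: List[str]) -> Dict[str, List[float]]:
    """Average the training epochs of each class into one template per class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for epoch, label in zip(epochs, labels):
        if label not in sums:
            sums[label] = [0.0] * len(epoch)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], epoch)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(epoch: Sequence[float], templates: Dict[str, List[float]]) -> Tuple[str, Dict[str, float]]:
    """Return the class whose template correlates best with the epoch, plus all scores."""
    scores = {label: pearson(epoch, tmpl) for label, tmpl in templates.items()}
    return max(scores, key=scores.get), scores


# Toy example: each side flickers with its own (hypothetical) binary code, and the
# "EEG" response is idealised as a noisy copy of the attended side's code.
left_code = [1, 0, 1, 1, 0, 0, 1, 0]
right_code = [0, 1, 1, 0, 1, 0, 0, 1]
templates = build_templates([left_code, right_code], ["left", "right"])
label, scores = classify([0.9, 0.1, 1.1, 0.8, 0.0, 0.2, 1.0, -0.1], templates)
print(label, scores)
```

With two attended sides, chance level is 50%, so decoding accuracy over many trials would need to be compared against that baseline, for example with a binomial test.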

Furthermore, the study explores whether visual alpha oscillations (roughly 8–12 Hz) provide another source of decodable information; covert spatial attention is known to decrease alpha power over the hemisphere contralateral to the attended side. Exploiting this may require a hybrid decoding approach that combines c-VEP and alpha features, which would also clarify how c-VEP responses and visual alpha oscillations interact in gaze-independent BCIs.
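
A minimal sketch of an alpha lateralization feature, assuming one left and one right parieto-occipital channel (e.g. PO7 and PO8), an 8–12 Hz band, and the convention index = (right - left) / (right + left) alpha power. The function names and the plain DFT are illustrative choices only; on real multichannel EEG a spectral estimator such as Welch's method (`scipy.signal.welch`) would be the more usual choice.

```python
import math
from typing import Sequence


def band_power(signal: Sequence[float], fs: float, low: float = 8.0, high: float = 12.0) -> float:
    """Sum of DFT power over the frequency bins between low and high Hz (inclusive)."""
    n = len(signal)
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if low <= freq <= high:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            power += (re * re + im * im) / n
    return power


def alpha_lateralization(left_ch: Sequence[float], right_ch: Sequence[float], fs: float) -> float:
    """(right - left) / (right + left) alpha power; negative = less alpha over the right hemisphere."""
    p_left = band_power(left_ch, fs)
    p_right = band_power(right_ch, fs)
    return (p_right - p_left) / (p_right + p_left)


def predict_side(index: float) -> str:
    """Alpha drops contralateral to the attended side, so a negative index suggests 'left'."""
    return "left" if index < 0 else "right"


fs = 100.0
t = [i / fs for i in range(100)]  # 1 s of toy data
left_ch = [2.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]   # e.g. PO7: strong alpha
right_ch = [1.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]  # e.g. PO8: weaker alpha
idx = alpha_lateralization(left_ch, right_ch, fs)
print(round(idx, 3), predict_side(idx))  # -0.6 left
```

A normalized index like this is insensitive to overall alpha power, which varies strongly between participants, and could serve as one input feature next to the c-VEP scores.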

Additionally, we have data from a covert c-VEP protocol that was run together with a simultaneous P300 oddball task. Alongside the c-VEP, it should therefore be possible to decode the P300 as well, which indicates the attended side.
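
A minimal sketch of how the attended side could be read out from the P300, assuming that oddball events occur on both sides and that only oddballs on the attended side elicit a clear P300. The 250–500 ms window, the single channel (e.g. Pz), and the toy epochs are assumptions; in practice a trained classifier such as shrinkage LDA on multichannel ERP features is more common than this simple amplitude comparison.

```python
from typing import List, Sequence


def mean_window_amplitude(epochs: List[Sequence[float]], fs: float,
                          start_s: float = 0.25, end_s: float = 0.5) -> float:
    """Average the epochs sample-wise, then take the mean amplitude within [start_s, end_s)."""
    n_samples = len(epochs[0])
    erp = [sum(ep[i] for ep in epochs) / len(epochs) for i in range(n_samples)]
    i0, i1 = int(round(start_s * fs)), int(round(end_s * fs))
    window = erp[i0:i1]
    return sum(window) / len(window)


def decode_attended_side(left_epochs: List[Sequence[float]],
                         right_epochs: List[Sequence[float]], fs: float) -> str:
    """Pick the side whose oddball events evoke the larger mean amplitude in the P300 window."""
    left_amp = mean_window_amplitude(left_epochs, fs)
    right_amp = mean_window_amplitude(right_epochs, fs)
    return "left" if left_amp > right_amp else "right"


fs = 100.0
# Toy epochs (0-0.8 s after oddball onset, baseline-corrected, single channel):
# oddballs on the attended (left) side carry a 5 µV positivity between 250 and 500 ms.
left_epochs = [[5.0 if 25 <= i < 50 else 0.0 for i in range(80)] for _ in range(3)]
right_epochs = [[0.0] * 80 for _ in range(3)]
print(decode_attended_side(left_epochs, right_epochs, fs))  # left
```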

Moreover, simultaneous eye tracking may offer a twofold advantage: it serves as a tool to confirm stable fixation, and it provides an additional data source. Existing literature indicates that covert spatial attention biases involuntary fixational eye movements, notably microsaccades, toward the attended side, which offers yet another source of decodable information. Pupil dilation is also influenced by spatial attention, making it a further candidate feature to investigate.
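
A simplified sketch of a microsaccade-based feature: a median-based velocity threshold in the spirit of Engbert and Kliegl (2003), applied to horizontal gaze position only, followed by a majority vote over the directions of the detected events. The threshold factor `lam`, the minimum run length, the function names, and the toy gaze trace are assumptions; the original method uses 2D velocity with an elliptic threshold and a smoothed velocity estimate, and pupil-size features are not covered here.

```python
import math
import random
import statistics
from typing import List, Optional, Sequence


def velocity(x: Sequence[float], fs: float) -> List[float]:
    """Central-difference velocity; element j belongs to sample j + 1 of x."""
    return [(x[i + 1] - x[i - 1]) * fs / 2.0 for i in range(1, len(x) - 1)]


def detect_microsaccades(x: Sequence[float], fs: float, lam: float = 6.0,
                         min_samples: int = 3) -> List[float]:
    """Return the summed velocity of each supra-threshold run (its sign = direction)."""
    v = velocity(x, fs)
    med = statistics.median(v)
    # Median-based estimate of the velocity spread, robust against the saccades themselves.
    sigma = math.sqrt(max(statistics.median([u * u for u in v]) - med * med, 1e-12))
    threshold = lam * sigma
    runs: List[float] = []
    current: List[float] = []
    for u in v + [0.0]:  # trailing 0.0 closes a run that reaches the end of the trace
        if abs(u) > threshold:
            current.append(u)
        else:
            if len(current) >= min_samples:
                runs.append(sum(current))
            current = []
    return runs


def attended_side_from_gaze(x: Sequence[float], fs: float) -> Optional[str]:
    """Majority direction of detected microsaccades (None if no direction dominates)."""
    runs = detect_microsaccades(x, fs)
    n_right = sum(1 for r in runs if r > 0)
    n_left = sum(1 for r in runs if r < 0)
    if n_right == n_left:
        return None
    return "right" if n_right > n_left else "left"


fs = 500.0
rng = random.Random(0)
gaze_x = []
for i in range(500):  # 1 s of horizontal gaze position in degrees
    if i < 200:
        pos = 0.0
    elif i < 205:
        pos = 0.3 * (i - 200) / 5  # 10 ms rightward microsaccade of 0.3 deg
    else:
        pos = 0.3
    gaze_x.append(pos + rng.gauss(0.0, 0.002))  # small fixation noise
print(attended_side_from_gaze(gaze_x, fs))
```

Because the result of a single trial rests on only a few microsaccades (or none, returning `None`), such a feature is probably most useful when combined with the EEG-based signals.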

Finally, each of the aforementioned signals (c-VEP, alpha oscillations, P300, eye tracking) can be used in isolation, but their contributions can also be combined in an ensemble classifier. In this way, a hybrid BCI could emerge that performs better than a BCI that uses only one input modality.
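
One simple way to merge the modalities is soft voting: each single-modality classifier outputs a probability that the left side is attended, and the ensemble takes a (weighted) average. The modality names, probabilities, and weights below are hypothetical; stacking, i.e. training a meta-classifier on the modality outputs, is a common alternative.

```python
from typing import Dict, Optional, Tuple


def soft_vote(p_left: Dict[str, float],
              weights: Optional[Dict[str, float]] = None) -> Tuple[str, float]:
    """Weighted average of per-modality P(attended side = left)."""
    if weights is None:
        weights = {name: 1.0 for name in p_left}  # equal weights by default
    total = sum(weights[name] for name in p_left)
    p = sum(weights[name] * prob for name, prob in p_left.items()) / total
    return ("left" if p >= 0.5 else "right"), p


# Hypothetical per-trial outputs of four single-modality classifiers.
outputs = {"c-VEP": 0.80, "alpha": 0.60, "P300": 0.40, "eye": 0.55}
print(soft_vote(outputs))
print(soft_vote(outputs, weights={"c-VEP": 1.0, "alpha": 0.5, "P300": 4.0, "eye": 0.5}))
```

The weights could, for example, be set from each modality's cross-validated accuracy, so that less reliable signals contribute less; as the second call shows, heavily weighting a single modality can flip the ensemble decision.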

Literature

- Narayanan, Ahmadi, Desain & Thielen (2024). Towards gaze-independent c-VEP BCI: A pilot study. Proceedings of the 9th Graz Brain-Computer Interface Conference 2024. DOI: https://doi.org/10.3217/978-3-99161-014-4-060

Skills / background required

- Very proficient in Python

- Proficient in machine learning

- Knowledge/interest in vision neuroscience