Code-modulated BCI paradigms

Context

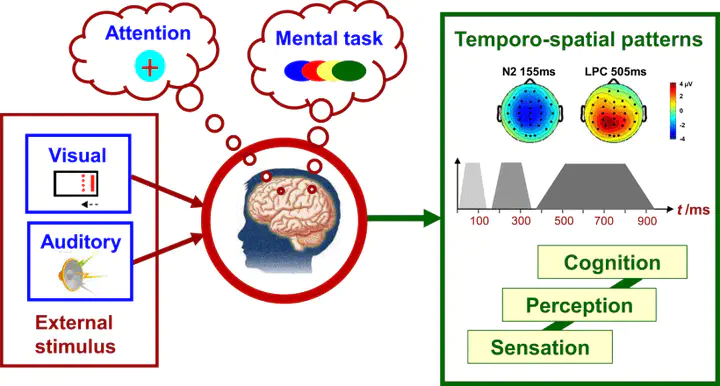

A brain-computer interface (BCI) can use a diverse array of control signals that are decoded from measured electroencephalogram (EEG) data. One such control signal is the code-modulated evoked potential, which is the brain's response to a pseudo-random sequence of sensory events. For instance, BCIs based on the code-modulated visual evoked potential (c-VEP) are recognized as among the most accurate and fastest non-invasive BCIs in the current literature.
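To make "pseudo-random sensory events" concrete, the sketch below generates one period of a binary maximum-length sequence (m-sequence) with a linear-feedback shift register. This is only an illustration: the text above does not specify which code family a given c-VEP setup uses, and the function name, register length and tap positions are assumptions chosen for the example.

```python
def m_sequence(n_bits=6, taps=(5, 6)):
    """Return one period of a binary m-sequence from a Fibonacci LFSR.

    The default taps (an assumption for this example) implement the
    recurrence b[n] = b[n-5] XOR b[n-6], which has period 2**6 - 1 = 63.
    """
    state = [1] * n_bits  # any non-zero start state works
    sequence = []
    for _ in range(2**n_bits - 1):
        sequence.append(state[-1])       # output the oldest bit
        feedback = 0
        for tap in taps:
            feedback ^= state[tap - 1]   # XOR the tapped register cells
        state = [feedback] + state[:-1]  # shift the register by one cell
    return sequence


code = m_sequence()
# Each bit could drive one stimulus frame, e.g. 1 = event on, 0 = event off.
print(len(code), sum(code))
```

Such sequences are nearly uncorrelated with shifted copies of themselves, which is one reason they are attractive as stimulation codes: different targets can in principle be told apart by their code or code shift.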

Nevertheless, a standard c-VEP BCI faces challenges, such as its dependence on the user's ability to change gaze position, that is, to make eye movements. Because some people have lost this ability, for example people living with ALS, it is imperative to explore alternative code-modulated paradigms.

One such alternative, still in the visual domain, is using covert spatial attention, which does not rely on eye movements. Alternatively, other sensory modalities, like auditory or tactile, may hold potential as well.

These alternatives remain largely unexplored in the context of code-modulated paradigms. Investigating the potential of covert visual, auditory and tactile modalities could pave the way for enhanced BCI accessibility and effectiveness.

Image credit: Gao, S., Wang, Y., Gao, X., & Hong, B. (2014). Visual and auditory brain–computer interfaces. IEEE Transactions on Biomedical Engineering, 61(5), 1436-1447.

Research question

As part of this project, you will explore the potential of one of the alternatives:

- c-VEP using covert spatial attention, so still in the visual domain

- c-AEP, so in the auditory domain

- c-SEP, so in the somatosensory domain

Specifically, you will set up and conduct an EEG experiment. Using the recorded data, you will study whether these signals can be decoded with a reliability that surpasses chance.
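One simple way to check whether decoding surpasses chance is a one-sided binomial test on the number of correctly classified trials. The sketch below assumes that trials are classified independently and that the chance level is one divided by the number of classes. The function name and the trial counts are hypothetical and only serve the example; this is not necessarily the analysis used in the project.

```python
from math import comb


def binomial_p_value(n_correct, n_trials, chance):
    """One-sided p-value: P(X >= n_correct) for X ~ Binomial(n_trials, chance)."""
    return sum(
        comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )


# Hypothetical two-class experiment: 28 of 40 trials decoded correctly.
n_classes = 2
p_value = binomial_p_value(n_correct=28, n_trials=40, chance=1 / n_classes)
print(f"accuracy = {28 / 40:.2f}, p = {p_value:.4f}")
```

A small p-value indicates that the observed accuracy would be unlikely if the decoder were guessing. With few trials, accuracies only slightly above the nominal chance level are usually not significant.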

Literature

Visual covert attention c-VEP:

- Narayanan, S., Ahmadi, S., Desain, P., & Thielen, J. (2024). Towards gaze-independent c-VEP BCI: A pilot study. Proceedings of the 9th Graz Brain-Computer Interface Conference 2024. DOI: https://doi.org/10.3217/978-3-99161-014-4-060

Auditory c-AEP:

- Scheppink, Ahmadi, Desain, Tangermann & Thielen (2024). Towards auditory attention decoding with noise-tagging: A pilot study. Proceedings of the 9th Graz Brain-Computer Interface Conference 2024. DOI: https://doi.org/10.3217/978-3-99161-014-4-059

Skills / background required

- Knowledge of and an interest in brain-computer interfacing

- Knowledge of and an interest in cognitive neuroscience

- Very proficient in Python