Auditory and tactile code-modulated BCI

Context

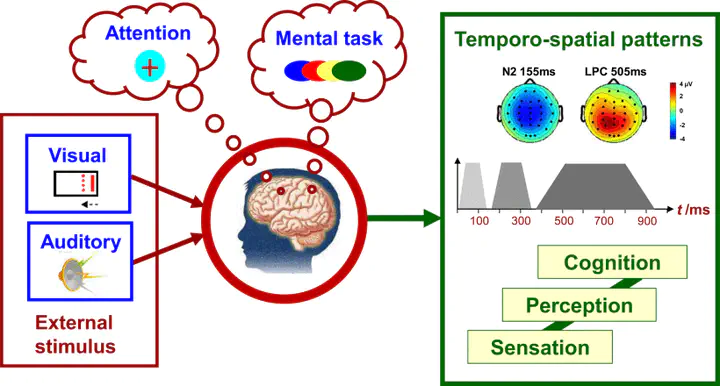

A brain-computer interface (BCI) can be driven by a variety of control signals decoded from the measured electroencephalogram (EEG). One such signal is the code-modulated visual evoked potential (c-VEP), the brain's response to a pseudo-random sequence of visual flashes. c-VEP BCIs are among the fastest and most accurate reported in the current literature, and hold considerable promise for communication and control applications, for instance for motor-impaired individuals such as people living with amyotrophic lateral sclerosis (ALS).

Nevertheless, as ALS progresses, patients may lose the ability to keep their eyes open and fixated, or may experience a decline in visual acuity. It is therefore important to explore sensory modalities beyond the visual domain. The auditory and tactile domains are viable alternatives, but they remain largely unexplored in the context of code-modulated paradigms. Investigating them could make BCIs more accessible and effective, particularly for ALS patients in advanced stages of the condition.

Image credit: Gao, S., Wang, Y., Gao, X., & Hong, B. (2014). Visual and auditory brain–computer interfaces. IEEE Transactions on Biomedical Engineering, 61(5), 1436-1447.

Research question

This project aims to explore the potential of code-modulated auditory evoked potentials (c-AEP) and/or code-modulated somatosensory evoked potentials (c-SEP), as measured through EEG, as reliable control signals for BCI. Specifically, we ask whether these signals can be decoded with an accuracy that is significantly above chance level.
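
To make "above chance" concrete, the sketch below computes a one-sided binomial test on classification accuracy. It is only an illustration: the function names, the significance level of 0.05, and the trial counts are assumptions for the example and are not prescribed by the project.

```python
from math import comb


def binomial_p_value(n_correct, n_trials, chance):
    """One-sided p-value: P(X >= n_correct) for X ~ Binomial(n_trials, chance)."""
    return sum(
        comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )


def min_correct_for_significance(n_trials, chance, alpha=0.05):
    """Smallest number of correct trials whose p-value falls below alpha."""
    for k in range(n_trials + 1):
        if binomial_p_value(k, n_trials, chance) < alpha:
            return k
    return None  # not reachable for 0 < chance < 1 and sensible alpha


# Hypothetical example: 20 trials of a two-class (left vs. right) task.
threshold = min_correct_for_significance(n_trials=20, chance=0.5)
print(threshold)  # 15, i.e. 75% accuracy is needed with only 20 trials
```

With few trials the required accuracy lies well above the nominal 50% chance level, which is worth keeping in mind when planning the number of trials per participant.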

For example, in the auditory domain, two (or more) audio streams (e.g., speech) could be presented, each directed to one ear, where the task is to decode from the EEG which audio stream is attended to (i.e., auditory attention decoding). In the tactile domain, stimulation could be delivered through a vibrotactile device applied to both hands. Interestingly, such task setups introduce lateralization due to the distinction between left and right, so the responses are expected to differ in their loci. These differences could be exploited by machine learning methods to improve the decoding performance of the BCI, and they invite the investigation of additional sources of information such as alpha lateralization.
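
The decoding idea can be illustrated with a toy simulation. It is a sketch under strong assumptions and not the method of the project: each stream is tagged with its own pseudo-random binary code, the simulated "EEG" is a single noisy channel in which the attended code is weighted more strongly than the unattended one, and the decoder is plain correlation-based template matching. All names, gains, and noise levels are hypothetical.

```python
import random


def make_code(n_bits, rng):
    """Pseudo-random binary code with values +1/-1 (stand-in for a noise tag)."""
    return [rng.choice((-1.0, 1.0)) for _ in range(n_bits)]


def correlation(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def simulate_trial(codes, attended, rng,
                   gain_attended=1.0, gain_unattended=0.3, noise_sd=2.0):
    """Assumed toy model: weighted sum of all codes plus Gaussian noise."""
    signal = []
    for i in range(len(codes[0])):
        value = rng.gauss(0.0, noise_sd)
        for j, code in enumerate(codes):
            gain = gain_attended if j == attended else gain_unattended
            value += gain * code[i]
        signal.append(value)
    return signal


def decode(signal, codes):
    """Return the index of the code that correlates best with the signal."""
    scores = [correlation(signal, code) for code in codes]
    return max(range(len(codes)), key=lambda j: scores[j])


def run_simulation(n_trials=100, n_bits=256, seed=0):
    """Fraction of simulated trials in which the attended stream is decoded."""
    rng = random.Random(seed)
    codes = [make_code(n_bits, rng), make_code(n_bits, rng)]  # left, right
    n_correct = 0
    for _ in range(n_trials):
        attended = rng.randrange(2)
        signal = simulate_trial(codes, attended, rng)
        n_correct += decode(signal, codes) == attended
    return n_correct / n_trials


print(f"Simulated decoding accuracy: {run_simulation():.2f}")
```

Real EEG is multi-channel and the evoked response is a filtered, delayed version of the code, so an actual decoder would need to learn spatial and temporal structure from data. Whether attention modulates the response strongly enough for this to work is exactly the open question.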

Literature

- Scheppink, Ahmadi, Desain, Tangermann & Thielen (2024). Towards auditory attention decoding with noise-tagging: A pilot study. Proceedings of the 9th Graz Brain-Computer Interface Conference 2024. DOI: https://doi.org/10.3217/978-3-99161-014-4-059

Skills / background required

- Very proficient in Python

- Proficient in machine learning

- Knowledge/interest in auditory/tactile neuroscience