Deep representation learning

Using deep learning to obtain embeddings for EEG signals.

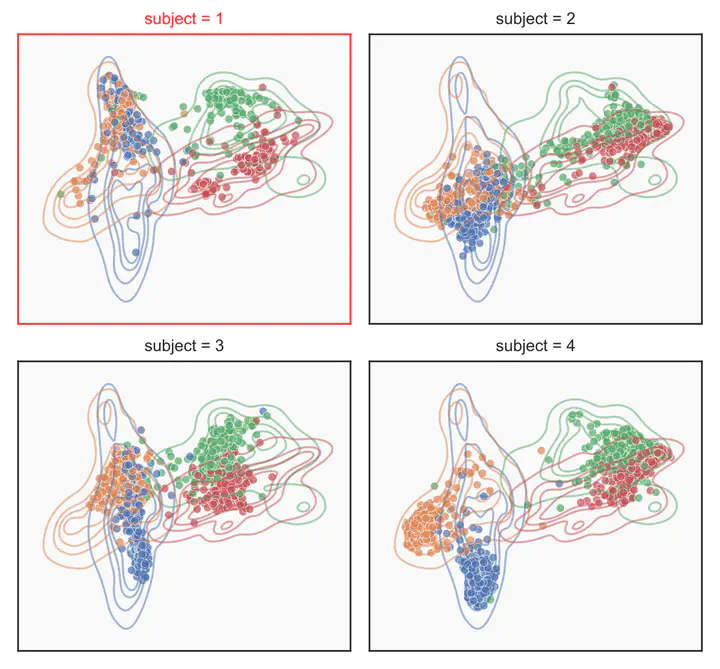

Projected view of embedding vectors for a motor imagery task

Summary

Embedding vectors are lower-dimensional representations of data elements. Such vectors are typically easier to process than the raw data. Embedding functions can be learned either in a supervised way, for example with metric learning methods, or in an unsupervised way, for example with autoencoders.
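To make the unsupervised case concrete, here is a minimal sketch of an autoencoder reduced to its simplest form: a linear encoder and a linear decoder trained by gradient descent on the reconstruction error. It is not a deep network and not the method used in this project. The function name `train_linear_autoencoder`, the hyperparameters, and the synthetic data (16 features driven by 2 latent factors, standing in for EEG feature vectors) are all assumptions made for illustration.

```python
import numpy as np


def train_linear_autoencoder(X, k, lr=0.005, n_steps=1000, seed=0):
    """Fit a linear autoencoder by gradient descent on the reconstruction error.

    X is an (n_examples, n_features) array; k is the embedding dimension.
    Returns the encoder weights, the decoder weights, and the loss at each step.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_enc = rng.normal(scale=0.1, size=(d, k))  # encoder: features -> embedding
    W_dec = rng.normal(scale=0.1, size=(k, d))  # decoder: embedding -> features
    losses = []
    for _ in range(n_steps):
        Z = X @ W_enc            # embeddings
        err = Z @ W_dec - X      # reconstruction error
        losses.append(float(np.sum(err ** 2) / n))
        # Gradients of the mean (over examples) squared reconstruction error.
        grad_dec = (2.0 / n) * Z.T @ err
        grad_enc = (2.0 / n) * X.T @ (err @ W_dec.T)
        W_enc -= lr * grad_enc
        W_dec -= lr * grad_dec
    return W_enc, W_dec, losses


# Synthetic stand-in for EEG feature vectors (hypothetical data, not real EEG).
rng = np.random.default_rng(42)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 16))
X = latent @ mixing + 0.1 * rng.normal(size=(200, 16))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize each feature

W_enc, W_dec, losses = train_linear_autoencoder(X, k=2)
embeddings = X @ W_enc  # one 2-dimensional embedding vector per example
print(embeddings.shape, losses[0], losses[-1])
```

No labels are used anywhere in the training loop: the embedding is shaped only by how well the data can be reconstructed from it. A deep autoencoder would replace the two matrices with nonlinear networks but keep the same objective.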

The research goals of deep representation learning in BCI are twofold. First, to develop embedding and introspection methods, including the transfer of methods from the image and language domains that tend to drive the field, as well as the development of EEG/BCI-specific methods. Second, to explore and test new usage scenarios for such embeddings in BCI.

Significance

Embedding vectors have several usage scenarios in BCI. One of the most common is transfer learning, where embeddings are learned on a pre-recorded dataset (labeled or not) and then used as a starting point for a new BCI session, subject, or task. This can be done through simple linear probing or through fine-tuning of the whole network. In both cases, using pre-trained representations of EEG signals allows for shorter calibration phases before online BCI usage. The pre-trained representations can also be made robust to factors that are not relevant to BCI tasks, using techniques such as data augmentation or domain-specific pre-training. Finally, fine-tuning focuses on solving the BCI task itself.
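Linear probing can be sketched as follows: the pre-trained encoder is kept frozen, and only a linear classifier is fitted on the embeddings of a short calibration set. In this sketch the linear probe is a ridge-regularized least-squares classifier, chosen because it has a closed-form solution; other linear classifiers would work as well. The embeddings are synthetic stand-ins for the outputs of a frozen encoder, and the names `fit_linear_probe`, `predict_linear_probe`, and `synthetic_embeddings` are hypothetical.

```python
import numpy as np


def fit_linear_probe(Z, y, n_classes, l2=1e-2):
    """Fit a ridge least-squares linear classifier on frozen embeddings Z."""
    Z1 = np.hstack([Z, np.ones((Z.shape[0], 1))])  # append a bias column
    Y = np.eye(n_classes)[y]                       # one-hot targets
    A = Z1.T @ Z1 + l2 * np.eye(Z1.shape[1])
    return np.linalg.solve(A, Z1.T @ Y)            # weights: (dim + 1, n_classes)


def predict_linear_probe(W, Z):
    """Predict the class with the highest linear score."""
    Z1 = np.hstack([Z, np.ones((Z.shape[0], 1))])
    return np.argmax(Z1 @ W, axis=1)


def synthetic_embeddings(n_per_class, rng, dim=8):
    """Two Gaussian clusters standing in for embeddings of two BCI classes."""
    z0 = rng.normal(loc=-1.0, size=(n_per_class, dim))
    z1 = rng.normal(loc=+1.0, size=(n_per_class, dim))
    return np.vstack([z0, z1]), np.repeat([0, 1], n_per_class)


rng = np.random.default_rng(0)
Z_calib, y_calib = synthetic_embeddings(10, rng)   # short calibration phase
Z_test, y_test = synthetic_embeddings(100, rng)    # later trials of the session

W = fit_linear_probe(Z_calib, y_calib, n_classes=2)
accuracy = float(np.mean(predict_linear_probe(W, Z_test) == y_test))
print(accuracy)
```

Only the `dim + 1` weights per class are estimated from the calibration trials, which is why a small calibration set can be enough when the embeddings already separate the classes. Whole-network fine-tuning would additionally update the encoder weights.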

Unsupervised methods stand out because they learn embeddings in a purely data-driven manner, without labels. This suggests that the embeddings they produce can make it easier to test new BCI tasks. They might even enable the discovery of new features in EEG signals.

Another usage scenario is to facilitate introspection of EEG signals. (1) Embedding vectors can be conveniently projected into 2D or 3D space for visualization purposes. These visualizations provide quick overviews of the distributions of entire datasets, making it possible to identify clusters or non-stationarities in recordings at a glance. (2) Because comparing embedding vectors is fast and indicates how similar two examples are, it can facilitate the retrieval of analogous examples from databases.
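Both introspection uses can be illustrated with a few lines of NumPy. The sketch below projects embedding vectors to 2D with PCA (one of several possible projection methods, picked here for simplicity) and retrieves the most similar examples to a query by cosine similarity. The function names `project_2d` and `retrieve_similar` and the random data are assumptions made for illustration.

```python
import numpy as np


def project_2d(Z):
    """Project embeddings onto their first two principal components."""
    Zc = Z - Z.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, _, Vt = np.linalg.svd(Zc, full_matrices=False)
    return Zc @ Vt[:2].T


def retrieve_similar(query, database, top_k=5):
    """Return indices and cosine similarities of the top_k closest embeddings."""
    q = query / np.linalg.norm(query)
    D = database / np.linalg.norm(database, axis=1, keepdims=True)
    sims = D @ q
    order = np.argsort(-sims)[:top_k]  # highest similarity first
    return order, sims[order]


# Random vectors standing in for a database of EEG embeddings (hypothetical).
rng = np.random.default_rng(1)
database = rng.normal(size=(500, 32))

points_2d = project_2d(database)  # ready for a scatter plot
indices, similarities = retrieve_similar(database[3], database, top_k=3)
print(points_2d.shape, indices, similarities)
```

Since the query here is itself a database entry, it is returned as its own best match; in practice the query would be the embedding of a new trial. The retrieval cost is a single matrix-vector product, which is what makes searching large databases of embeddings fast.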

Key publications / awards