Reinforcement learning under high noise and uncertainty

Evaluation and improvement of reinforcement learning (RL) approaches for learning control strategies under challenging conditions

Context

Reinforcement learning (RL) methods interact with a system in a closed loop in order to learn suitable action strategies. The goal of this interaction is to control the system, e.g., to bring it into a desired state. Most RL approaches, however, assume that the state can be estimated rather precisely (cf. agents performing tasks in simulation environments).
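The closed-loop interaction described above (observe the state, choose an action, receive a reward) can be sketched as follows. This is a minimal illustration under stated assumptions: the one-dimensional linear system `x[t+1] = 0.9 * x[t] + u[t] + w[t]`, the fixed proportional policy standing in for a learned RL policy, and the helper name `run_closed_loop` are all hypothetical. It only shows how imprecise state estimates degrade control performance even when the policy itself is unchanged.

```python
import numpy as np


def run_closed_loop(obs_noise_std, n_steps=2000, gain=0.5, seed=0):
    """Run a hypothetical 1-D system in closed loop and return the mean reward.

    Assumed system: x[t+1] = 0.9 * x[t] + u[t] + w[t], w ~ N(0, 0.1**2).
    The agent only sees y[t] = x[t] + v[t], v ~ N(0, obs_noise_std**2).
    The fixed policy u = -gain * y is a stand-in for a learned RL policy.
    """
    rng = np.random.default_rng(seed)
    x = 5.0  # initial state, away from the desired state 0
    rewards = []
    for _ in range(n_steps):
        y = x + rng.normal(0.0, obs_noise_std)   # (noisy) state estimate
        u = -gain * y                            # action chosen from the estimate
        x = 0.9 * x + u + rng.normal(0.0, 0.1)   # system transition
        rewards.append(-x ** 2)                  # reward: stay close to 0
    return float(np.mean(rewards))


for std in (0.0, 1.0, 3.0):
    print(f"observation noise std={std}: mean reward={run_closed_loop(std):.3f}")
```

With a precise state estimate (`obs_noise_std=0.0`) the mean reward stays close to zero; as the observation noise grows, the same policy keeps reacting to noise and the mean reward drops.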

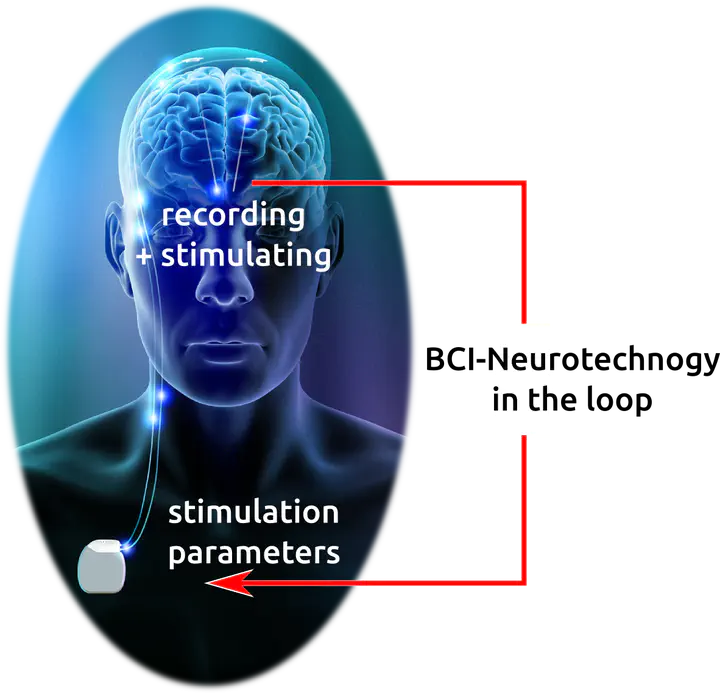

We have ample possibilities to interact with the brain or neural tissue, e.g., by presenting natural stimuli (tones, visual stimuli) to a participant or by applying electrical, magnetic, or optical stimulation to neurons. However, goal-directed closed-loop interaction with the brain is particularly difficult, as this “system” exhibits several characteristics (noisy state estimates, history-dependent effects of actions, non-stationarity) that are extremely challenging for standard RL algorithms. At the same time, future closed-loop applications of neurotechnology will require fast learning of brain-state-dependent stimulation strategies, e.g., to enable better treatment of patients with Parkinson’s disease, major depression, or anxiety disorders, or to support rehabilitation training after stroke.
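The three characteristics named above can be combined in a small toy environment. The following is a hypothetical sketch, not the simulation environment used in the project: the class name `ToyNeuroEnv`, the habituation mechanism, the random-walk drift, and all constants are assumptions chosen for illustration. It uses a simplified `reset()`/`step()` interface in the style of common RL environment libraries.

```python
import numpy as np


class ToyNeuroEnv:
    """Hypothetical 1-D environment; all dynamics and constants are assumptions.

    - noisy state estimates: the agent sees x + N(0, obs_noise_std**2), never x
    - history dependence: past actions build up a habituation variable h
      that weakens the effect of later actions
    - non-stationarity: the resting level the state relaxes toward drifts
      as a random walk
    """

    def __init__(self, obs_noise_std=1.0, habituation_decay=0.9,
                 drift_std=0.02, target=0.0, seed=None):
        self.obs_noise_std = obs_noise_std
        self.habituation_decay = habituation_decay
        self.drift_std = drift_std
        self.target = target
        self.rng = np.random.default_rng(seed)
        self.reset()

    def reset(self):
        self.x = 2.0      # latent state, unobserved by the agent
        self.h = 0.0      # habituation accumulated from past actions
        self.rest = 2.0   # resting level, drifts over time
        return self._observe()

    def _observe(self):
        return self.x + self.rng.normal(0.0, self.obs_noise_std)

    def step(self, action):
        a = float(np.clip(action, -1.0, 1.0))
        effect = a / (1.0 + self.h)                        # weakened by history
        self.h = self.habituation_decay * self.h + abs(a)  # update habituation
        self.rest += self.rng.normal(0.0, self.drift_std)  # slow drift
        self.x += 0.1 * (self.rest - self.x) + effect + self.rng.normal(0.0, 0.05)
        reward = -(self.x - self.target) ** 2              # from the latent state
        return self._observe(), reward, {"effect": effect}


env = ToyNeuroEnv(seed=0)
obs = env.reset()
effects = []
for _ in range(20):
    obs, reward, info = env.step(1.0)  # constant maximal stimulation
    effects.append(info["effect"])
print(effects[0], effects[-1])
```

Under constant maximal stimulation the first action has its full effect (1.0), while after 20 steps the same action achieves only about a tenth of it. Observation noise and drift can be switched off by setting `obs_noise_std` and `drift_std` to 0, and `habituation_decay` controls how long past actions keep influencing the system, so each characteristic can be studied in isolation or in combination.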

Research question

Using a simulation environment that implements some of these challenging characteristics, standard RL algorithms and modified RL approaches shall be compared in order to identify the model classes best suited for follow-up closed-loop experiments in neurotechnological applications. The performance of these model classes shall also be compared to baseline approaches such as PID control.
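As a reference point for the PID baseline, a minimal discrete-time PID controller can be sketched as follows. The plant (a first-order system dx/dt = -x + u + d with a constant disturbance d, integrated with the Euler method), the gains, and the names `PIDController` and `simulate` are illustrative assumptions, not part of the project specification.

```python
import numpy as np


class PIDController:
    """Discrete-time PID controller (positional form)."""

    def __init__(self, kp, ki, kd, dt, setpoint=0.0):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.setpoint = setpoint
        self.integral = 0.0
        self.prev_error = None

    def __call__(self, measurement):
        error = self.setpoint - measurement
        self.integral += error * self.dt
        # No derivative contribution on the very first call.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(controller, obs_noise_std=0.0, n_steps=500, dt=0.1, seed=0):
    """Control a hypothetical plant dx/dt = -x + u + d (Euler-discretized)."""
    rng = np.random.default_rng(seed)
    x = 0.0
    disturbance = 0.5  # constant offset that the integral term must compensate
    trajectory = []
    for _ in range(n_steps):
        y = x + rng.normal(0.0, obs_noise_std)  # noisy measurement
        u = controller(y)
        x = x + dt * (-x + u + disturbance)
        trajectory.append(x)
    return np.array(trajectory)


# Fresh controllers per run, since each one keeps internal state.
clean = simulate(PIDController(kp=2.0, ki=1.0, kd=0.0, dt=0.1, setpoint=1.0))
noisy = simulate(PIDController(kp=2.0, ki=1.0, kd=0.0, dt=0.1, setpoint=1.0),
                 obs_noise_std=0.5)
print(f"final state without noise: {clean[-1]:.4f}")
print(f"std of last 200 steps with noise: {noisy[-200:].std():.4f}")
```

Without observation noise, the integral term removes the offset caused by the disturbance and the state settles at the setpoint. With `obs_noise_std > 0`, the measurement noise is fed directly into the control signal and the state keeps fluctuating, so noisy state estimates also affect classical controllers. This makes PID a meaningful, though not trivially beaten, baseline for the RL approaches.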

Skills required:

- Control theory

- Reinforcement learning

- Good programming skills in Python (familiarity with numpy, sklearn, and at least one popular deep learning framework).

- Good mathematical background and intuition.