Pushing the Frontier of EEG Foundation Model Applications

Evaluating large-scale pre-trained foundation models on novel exploratory tasks

Problem

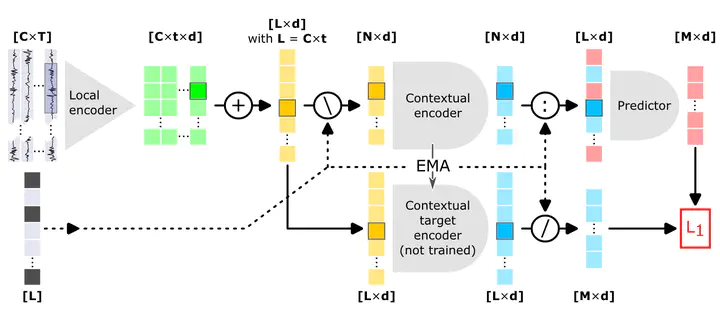

The “BERT moment” for EEG has arrived. Recent foundation models (such as LaBraM, BIOT, or BENDR) have been pre-trained on massive, diverse datasets to learn universal neural representations. While these models perform well on standard public benchmarks, their “frontier” utility remains unclear: we don’t yet know how well they generalize to novel, non-standard tasks that don’t follow typical BCI paradigms. In exploratory research, where data is often scarce and tasks are unconventional, can a foundation model truly provide a “zero-shot” or “few-shot” advantage?

Objective

This project aims to bring EEG foundation models into frontier experimental research: students will evaluate, adapt, and validate pre-trained EEG models on novel tasks using private datasets collected in the lab or provided by collaborators. The goal is to characterize model generalization and robustness, and to identify failure modes.

Tasks

This project will involve:

- Curating and preprocessing private EEG datasets and their metadata.

- Evaluating pre-trained EEG foundation models on novel tasks built from these datasets, and quantifying their generalization and robustness.

- Implementing well-established decoding methods as baselines.

- Analyzing failure modes and montage sensitivity, and characterizing what limits the models’ generalization ability.

- Documenting experiments, producing reproducible code, and reporting results.
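
To give a feel for what the evaluation step might look like, here is a minimal sketch of a per-subject few-shot probe. It is only an illustration under stated assumptions, not the project's prescribed protocol: the random features stand in for embeddings that would come from a frozen pre-trained model, the nearest-centroid classifier is one simple choice of probe among many, and the names `few_shot_probe_accuracy` and `make_synthetic_embeddings` are hypothetical helpers introduced here.

```python
import numpy as np


def few_shot_probe_accuracy(features, labels, subjects, shots_per_class=5, seed=0):
    """Per-subject few-shot evaluation with a nearest-centroid probe.

    For each subject, `shots_per_class` labelled trials per class form the
    support set used to build class centroids; the subject's remaining trials
    are classified by their nearest centroid.
    """
    rng = np.random.default_rng(seed)
    accuracies = {}
    for subject in np.unique(subjects):
        idx = np.flatnonzero(subjects == subject)
        classes = np.unique(labels[idx])
        support_idx, query_idx = [], []
        for cls in classes:
            cls_idx = rng.permutation(idx[labels[idx] == cls])
            support_idx.append(cls_idx[:shots_per_class])
            query_idx.append(cls_idx[shots_per_class:])
        query_idx = np.concatenate(query_idx)
        if query_idx.size == 0:
            continue  # not enough trials left to evaluate this subject
        centroids = np.stack([features[s].mean(axis=0) for s in support_idx])
        # Squared Euclidean distance from every query trial to every centroid.
        diffs = features[query_idx][:, None, :] - centroids[None, :, :]
        dists = (diffs ** 2).sum(axis=-1)
        predictions = classes[dists.argmin(axis=1)]
        accuracies[subject.item()] = float((predictions == labels[query_idx]).mean())
    return accuracies


def make_synthetic_embeddings(n_subjects=3, trials_per_class=20, dim=16, seed=0):
    """Synthetic stand-in for frozen model embeddings (assumption, not real EEG)."""
    rng = np.random.default_rng(seed)
    features, labels, subjects = [], [], []
    for subject in range(n_subjects):
        offset = rng.normal(scale=2.0, size=dim)  # subject-specific shift
        for cls in (0, 1):
            x = rng.normal(size=(trials_per_class, dim)) + offset + 2.0 * cls
            features.append(x)
            labels.append(np.full(trials_per_class, cls))
            subjects.append(np.full(trials_per_class, subject))
    return np.concatenate(features), np.concatenate(labels), np.concatenate(subjects)


features, labels, subjects = make_synthetic_embeddings()
accuracies = few_shot_probe_accuracy(features, labels, subjects, shots_per_class=5)
for subject, acc in accuracies.items():
    print(f"subject {subject}: accuracy {acc:.2f}")
```

Reporting accuracy per subject rather than pooled over all trials keeps subject-level failure modes visible. In practice the same loop could be run on embeddings from each foundation model and on features from a classical baseline, so that the comparison uses identical support and query splits.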

Skills required

- Good programming skills in Python

- Experience with the PyTorch library

- Optional: Experience with EEG data analysis and BCI concepts

- Optional: Experience with EEG libraries such as MNE-Python or Braindecode